Using the Web Audio API you can create and process sounds in any web application, right inside the browser.

The capabilities of the Web Audio API are governed by a W3C draft standard. It was originally proposed by Google and has been under development for several years. The standard is still being worked on, but the API is already widely implemented across desktop and mobile browsers. It is something we can use in our applications today.

This is an introduction to a series I'm writing about making sounds and music with the Web Audio API. It is written for JavaScript developers who don't necessarily have any background in music or audio engineering.

What Can I Use Web Audio For?

If you're like me and mostly get paid to make business applications, the use cases for Web Audio don't always seem obvious. If your users are mostly looking at data and filling in forms, chances are they're not going to appreciate the explosion effects you trigger during form validation, or that elevator music you included in an effort to improve their mood.

But there are many kinds of applications where audio is very much front and center.

- Games are possibly the most obvious use case for Web Audio. If you want an immersive game experience, you're going to need sound effects and/or music.

- Apps that use any kind of (possibly live) streaming video or audio often need Web Audio. This includes video and audio chat as well as broadcasting.

- Telepresence applications and other virtual reality apps built on WebVR are a new exciting possibility on the web platform, and sound is a very important part of them.

- Many apps may use sounds for effects. Learning applications and all kinds of children's applications come to mind.

- Music is obviously a very important use case. This includes both listening to recorded or live music, and creating music by way of synthesizing it, sequencing it, or performing it using MIDI peripherals.

- You can also make audiovisual art by combining music or sounds produced with Web Audio with visuals made with 2D canvas graphics, 3D WebGL, or SVG.

There's also a use case document from the W3C that describes some of the intended uses of Web Audio in detail.

What's Included?

At the heart of the Web Audio API are a number of different audio inputs, processors, and outputs, which you can combine into an audio graph that creates the sound you need.

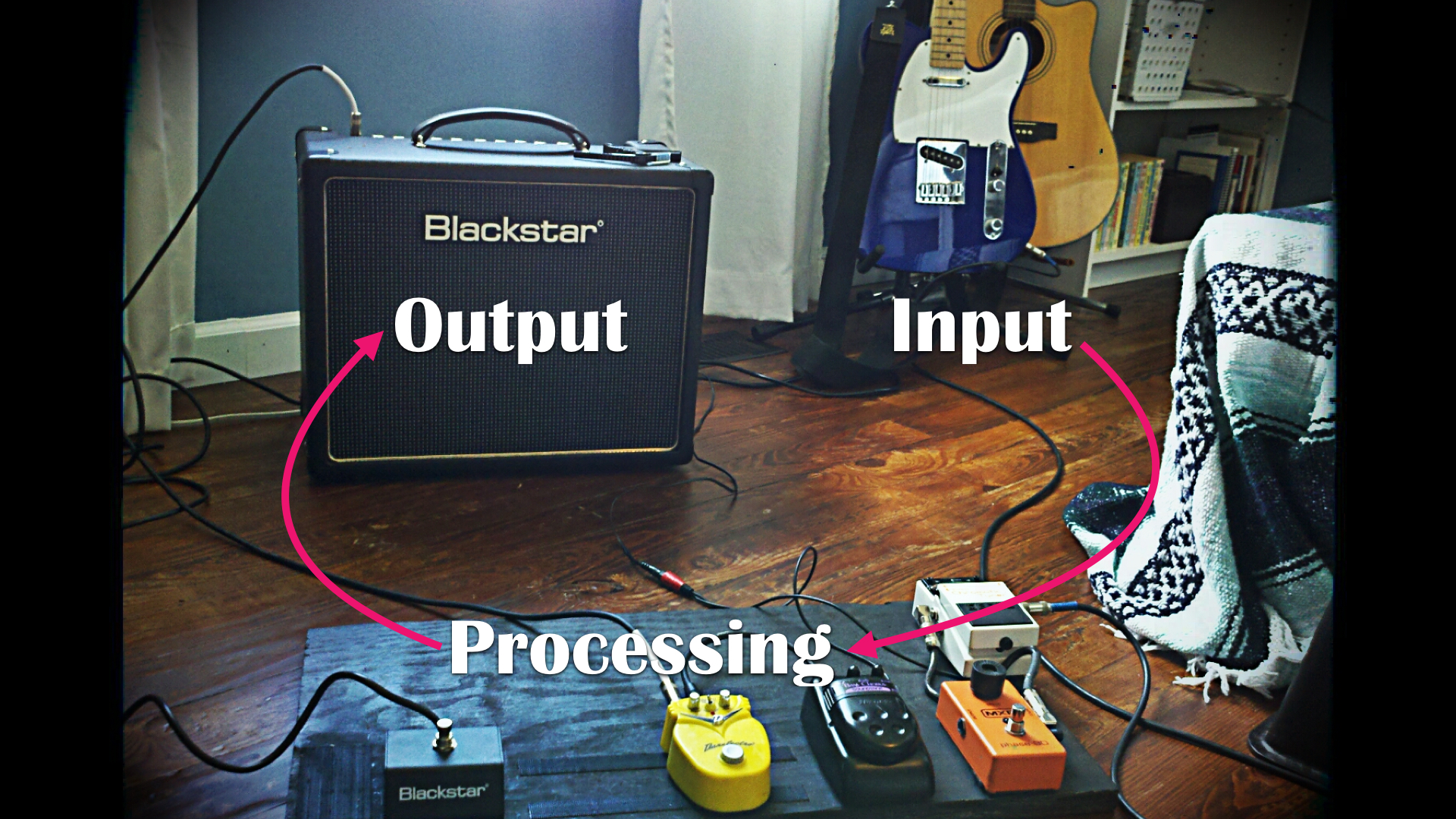

Conceptually it is very similar to the setup of an electric guitarist: The guitar and its pickup microphones form the sound input which generates an audio signal. The signal then flows through a chain of effects that are connected together. The effects modify the signal in various ways, and can be tweaked at runtime by pressing pedals and tweaking knobs. Finally the signal reaches the amp, which outputs the sound.

In Web Audio we have the following inputs, processing nodes, and outputs to choose from:

Input

Buffer Sources

When you have a prerecorded sound sample sitting in a file (like an .mp3 or a .wav), you can load it into your application with an XHR, decode it into an AudioBuffer, and then play it back with an AudioBufferSourceNode.

AudioBuffers are basically just wrappers for Float32Arrays that hold the decoded samples. They can easily take up a lot of RAM, so they're best suited to short sounds.
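To get a feel for the RAM cost, here's a rough back-of-the-envelope sketch. It assumes the decoded audio is held as one 32-bit float per sample per channel, which is what the Float32Array backing suggests. `estimateBufferBytes` is a hypothetical helper of mine, not part of the Web Audio API.

```javascript
// Hypothetical helper: approximate memory held by a decoded AudioBuffer.
// Assumes 4 bytes (one 32-bit float) per sample, per channel.
function estimateBufferBytes(durationSeconds, sampleRate, channelCount) {
  const bytesPerSample = Float32Array.BYTES_PER_ELEMENT; // 4
  const framesPerChannel = Math.ceil(durationSeconds * sampleRate);
  return framesPerChannel * channelCount * bytesPerSample;
}

// Three minutes of stereo audio at 44.1 kHz
const bytes = estimateBufferBytes(180, 44100, 2);
console.log((bytes / (1024 * 1024)).toFixed(1) + ' MiB');
```

Three minutes of stereo sound comes out at 63,504,000 bytes, or roughly 60 MiB, regardless of how small the compressed .mp3 was on disk.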

Media Element Sources

You can grab the audio signal from an <audio> or <video> element into a MediaElementAudioSourceNode.

These elements support streaming so, unlike with buffer sources, their content can be arbitrarily long.

Media Stream Sources

You can use Media Streams to take local microphone input or the audio stream from a remote WebRTC peer and feed it to a MediaStreamAudioSourceNode.
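As a minimal sketch of the microphone case, assuming the browser supports `getUserMedia` and the user grants permission: `connectMicrophone` is a hypothetical helper name, and the `mediaDevices` parameter exists only so the function can be exercised outside a browser.

```javascript
// Ask for microphone access and wrap the resulting MediaStream in a source node.
// `audioCtx` is an AudioContext created elsewhere.
async function connectMicrophone(audioCtx, mediaDevices = navigator.mediaDevices) {
  const stream = await mediaDevices.getUserMedia({ audio: true });
  // The returned MediaStreamAudioSourceNode can be connected like any other input.
  return audioCtx.createMediaStreamSource(stream);
}

// Usage (in a browser):
//   const mic = await connectMicrophone(audioCtx);
//   mic.connect(someProcessingNode);
```

I've deliberately not connected the microphone straight to the speakers here, since that tends to cause feedback.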

Oscillators

Instead of taking some existing sound signal, you can also pull sounds out of thin air by synthesizing them using OscillatorNodes.

You can use oscillators to make continuous sine, square, sawtooth, triangle and custom-shaped waves.
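Here's a small sketch of what that looks like in practice. `playBeep` is a hypothetical helper, and the default frequency and duration are arbitrary choices of mine.

```javascript
// Play a short tone. `audioCtx` is an AudioContext created elsewhere.
function playBeep(audioCtx, frequencyHz = 440, durationSeconds = 0.5) {
  const osc = audioCtx.createOscillator();
  osc.type = 'sine'; // or 'square', 'sawtooth', 'triangle'
  osc.frequency.value = frequencyHz;
  osc.connect(audioCtx.destination);

  // Schedule start and stop on the audio clock rather than with timers.
  const now = audioCtx.currentTime;
  osc.start(now);
  osc.stop(now + durationSeconds);
  return osc;
}
```

An oscillator can only be started once, so you would call something like this again to get a fresh node for each new beep.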

Processing

Gains

You can control volume with GainNodes. Just set the volume level, or make it dynamic with fade ins/outs or tremolo effects.
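A fade-out, for example, could be sketched like this. `fadeOut` is a hypothetical helper, and I'm assuming a linear ramp is good enough for the effect you're after.

```javascript
// Fade a GainNode to silence over `seconds`, starting now.
function fadeOut(audioCtx, gainNode, seconds) {
  const now = audioCtx.currentTime;
  const gain = gainNode.gain; // an AudioParam
  gain.cancelScheduledValues(now);      // drop any earlier automation
  gain.setValueAtTime(gain.value, now); // anchor the ramp at the current level
  gain.linearRampToValueAtTime(0, now + seconds);
}

// Usage:
//   const volume = audioCtx.createGain();
//   source.connect(volume);
//   volume.connect(audioCtx.destination);
//   fadeOut(audioCtx, volume, 2);
```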

Filters

Filters allow you to adjust the volume of a specific audio frequency range. BiquadFilterNode and IIRFilterNode support many different kinds of frequency responses. These are the building blocks for a great variety of things: Cleaning up sound, making equalizers, synth filter sweeps, and wah-wah effects.

Delays

DelayNodes allow you to hold on to an audio signal for a moment before feeding it forward. You can make echoes and other kinds of delay-based effects. They're also useful for adjusting audio-video sync.
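One common way to get a repeating echo is to feed a delay's output back into itself through a gain that's below 1, so each repeat is quieter than the last. This is a sketch of that idea; `createEcho` and its default values are my own assumptions.

```javascript
// Build a simple feedback echo: delay -> feedback gain -> back into the delay.
function createEcho(audioCtx, delaySeconds = 0.3, feedbackLevel = 0.4) {
  const delay = audioCtx.createDelay(); // default maximum delay is 1 second
  const feedback = audioCtx.createGain();
  delay.delayTime.value = delaySeconds;
  feedback.gain.value = feedbackLevel;  // keep below 1 so the echoes die out

  delay.connect(feedback);
  feedback.connect(delay);
  return { delay, feedback };
}

// Usage:
//   const echo = createEcho(audioCtx);
//   source.connect(audioCtx.destination);     // dry signal
//   source.connect(echo.delay);               // send to the echo
//   echo.delay.connect(audioCtx.destination); // wet signal
```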

Stereo Panning

With StereoPannerNode you can move sounds around in the stereo field: To the left ear, right ear, or somewhere in between.

3D Spatialization

With PannerNode you can move sounds around not just in the stereo field, but in 3D space. Make things louder or quieter based on whether they're "near" or "far" and whether they're projecting sound in your direction or not. Very useful for games, virtual reality apps, and other apps where you need to match the positions of sound sources with visuals.

Convolution Reverb

The ConvolverNode lets you make things sound like you're in a physical space like a room, a music hall, or a cave. There's no shortage of options given the huge amount of impulse responses freely available online. In games you can emulate different physical spaces. In music you can add ambience or emulate vintage gear.

Distortion

You can distort sounds using WaveShaperNodes. Mostly useful in music, where distortion can be used in many kinds of effects, ranging from an "analog warmth" to a filthy overdrive.
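A WaveShaperNode takes a curve, a Float32Array that maps input levels from -1 to 1 onto output levels. Here's one possible curve, a tanh-based soft clipper. The choice of tanh, the `drive` parameter, and the name `makeSoftClipCurve` are all my own assumptions, and there are many other shapes you could use.

```javascript
// Build a soft-clipping curve for WaveShaperNode.curve.
// `drive` must be greater than 0; higher values push the signal harder.
function makeSoftClipCurve(drive = 5, sampleCount = 1025) {
  const curve = new Float32Array(sampleCount);
  const norm = Math.tanh(drive);
  for (let i = 0; i < sampleCount; i++) {
    const x = (i / (sampleCount - 1)) * 2 - 1; // map index to an input level in [-1, 1]
    curve[i] = Math.tanh(drive * x) / norm;    // normalize so full-scale input stays full-scale
  }
  return curve;
}

// Usage:
//   const shaper = audioCtx.createWaveShaper();
//   shaper.curve = makeSoftClipCurve(8);
```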

Compression

You can use a DynamicsCompressorNode to reduce the dynamic range of your soundscape by making loud sounds quieter and quiet sounds louder. Useful in situations where certain sounds may pile up and overtake everything else, or conversely not stand out enough.

Custom Effects: AudioWorklets

When none of the built-in processors does what you need, you can make an AudioWorklet and do arbitrary real-time audio processing in JavaScript. AudioWorklets are similar to Web Workers in that they run in a separate context from the rest of your app. But unlike Web Workers, they all run on the audio thread with the rest of the audio processing.

This is a new API and not really available yet. Current apps need to use the deprecated ScriptProcessorNode instead.

Channel Splitting & Merging

When you're working with stereo or surround sound, you have several sound channels (e.g. left and right). Usually all of the channels go through the same nodes in the graph, but you can also process them separately. Split the channels, route them to separate processing nodes, and then merge them back again.
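As a sketch of the split, process, and merge pattern, here's a stereo signal getting a separate gain for each channel. `createPerChannelGain` is a hypothetical helper, and it assumes a two-channel signal.

```javascript
// Split a stereo signal, apply a different gain to each channel, and merge again.
function createPerChannelGain(audioCtx, leftLevel, rightLevel) {
  const splitter = audioCtx.createChannelSplitter(2);
  const merger = audioCtx.createChannelMerger(2);
  const leftGain = audioCtx.createGain();
  const rightGain = audioCtx.createGain();
  leftGain.gain.value = leftLevel;
  rightGain.gain.value = rightLevel;

  // connect(destination, outputIndex, inputIndex)
  splitter.connect(leftGain, 0);   // splitter output 0 carries the left channel
  splitter.connect(rightGain, 1);  // splitter output 1 carries the right channel
  leftGain.connect(merger, 0, 0);  // into merger input 0 (left)
  rightGain.connect(merger, 0, 1); // into merger input 1 (right)
  return { input: splitter, output: merger };
}

// Usage:
//   const balance = createPerChannelGain(audioCtx, 0.5, 1);
//   source.connect(balance.input);
//   balance.output.connect(audioCtx.destination);
```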

Analysis & Visualization

With AnalyserNode you can get real-time read-only access to audio streams. You can build visualizations on top of this, ranging from simple waveforms to all kinds of artistic visual effects.
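One of the simplest things you can build on this is a level meter. The sketch below computes the RMS level of one block of samples. `rmsLevel` is a hypothetical helper, and the usage comment assumes a browser that supports `getFloatTimeDomainData`.

```javascript
// Root-mean-square level of a block of samples in the range [-1, 1].
function rmsLevel(samples) {
  if (samples.length === 0) return 0;
  let sumOfSquares = 0;
  for (const sample of samples) {
    sumOfSquares += sample * sample;
  }
  return Math.sqrt(sumOfSquares / samples.length);
}

// Usage (in a browser):
//   const analyser = audioCtx.createAnalyser();
//   source.connect(analyser);
//   const block = new Float32Array(analyser.fftSize);
//   function draw() {
//     analyser.getFloatTimeDomainData(block); // copies the latest samples into `block`
//     const level = rmsLevel(block);          // 0 is silence, larger is louder
//     requestAnimationFrame(draw);
//   }
//   draw();
```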

Output

Speakers

The most common and most obvious destination of your Web Audio graph is the user's speakers or headphones.

This is the default AudioDestinationNode of any Web Audio context.

Stream Destinations

Like its stream source counterpart, MediaStreamAudioDestinationNode provides access to WebRTC MediaStreams.

You can send your audio output to a remote WebRTC peer, broadcast to many such peers, or just record the audio to a local file.

Buffer Destinations

You can construct an OfflineAudioContext to build a whole Web Audio graph that outputs to an AudioBuffer instead of the device's speakers. You can then send that AudioBuffer somewhere or use it as a source in another Web Audio graph.

These kinds of audio contexts will try to process audio faster than real time so that you get the result buffer as quickly as possible. They can be useful for "prerendering" expensive sound effects and then playing them back multiple times. This is similar to rendering a complex visual scene on a <canvas> and then drawing that canvas to another one multiple times.
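Here's a minimal sketch of prerendering, assuming a browser that supports the Promise-based `startRendering()`. `prerenderTone` is a hypothetical helper, and the `OfflineCtx` parameter is there only so the function can be exercised outside a browser.

```javascript
// Render a 440 Hz tone into an AudioBuffer without playing it.
function prerenderTone(seconds, sampleRate = 44100, OfflineCtx = globalThis.OfflineAudioContext) {
  // Arguments: number of channels, length in sample frames, sample rate
  const offlineCtx = new OfflineCtx(1, Math.ceil(seconds * sampleRate), sampleRate);
  const osc = offlineCtx.createOscillator();
  osc.frequency.value = 440;
  osc.connect(offlineCtx.destination);
  osc.start(0);
  return offlineCtx.startRendering(); // resolves with the rendered AudioBuffer
}

// Usage (in a browser):
//   const rendered = await prerenderTone(1);
//   const source = audioCtx.createBufferSource();
//   source.buffer = rendered;
//   source.connect(audioCtx.destination);
//   source.start();
```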

How Does It Work?

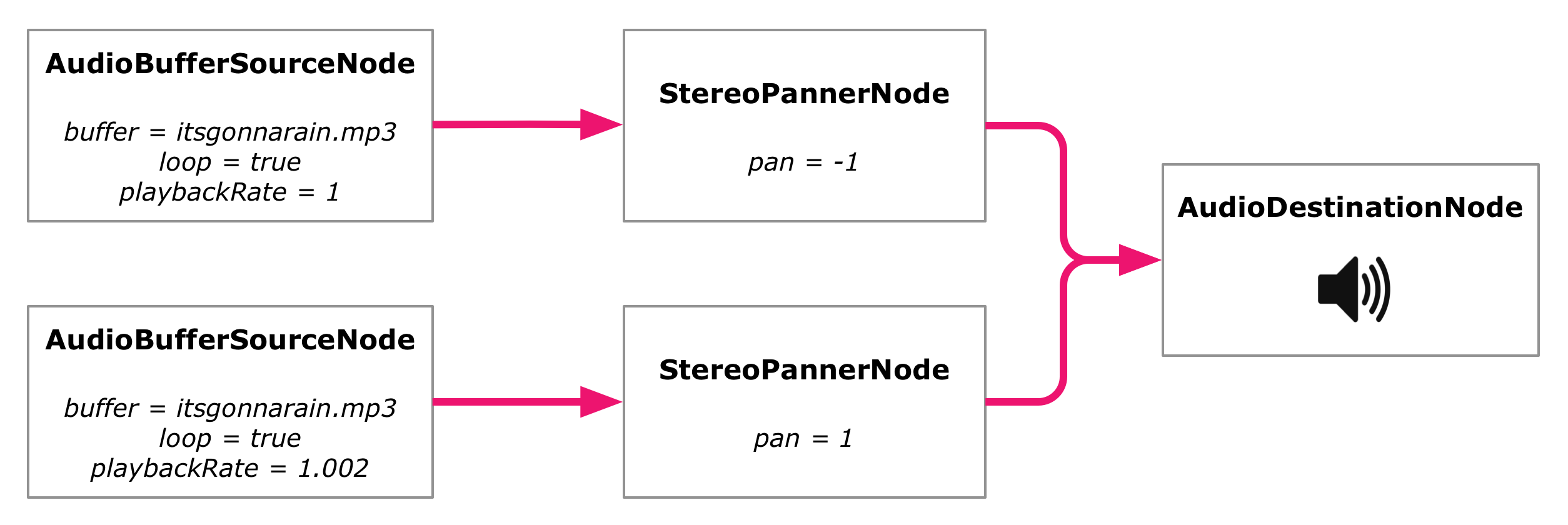

The way you use Web Audio is by constructing an audio signal graph using the building blocks listed above. You need one or more inputs, one or more outputs, and any number of processing nodes in between.

You can construct such graphs using the Web Audio JavaScript APIs. First you create an AudioContext. This is the main entry point to Web Audio. It hosts your audio graph, establishes an audio processing background thread, and opens up a system audio stream:

```javascript
// Establish an AudioContext
let audioCtx = new AudioContext();
```

You usually only need one such AudioContext per application. Once you have it, you can use it to create and connect various processing nodes and parameters:

```javascript
// Establish an AudioContext
let audioCtx = new AudioContext();

// Create the nodes of your audio graph
let sourceLeft = audioCtx.createBufferSource();
let sourceRight = audioCtx.createBufferSource();
let pannerLeft = audioCtx.createStereoPanner();
let pannerRight = audioCtx.createStereoPanner();

// Set parameters on the nodes
sourceLeft.buffer = myBuffer;
sourceLeft.loop = true;
sourceRight.buffer = myBuffer;
sourceRight.loop = true;
sourceRight.playbackRate.value = 1.002;
pannerLeft.pan.value = -1;
pannerRight.pan.value = 1;

// Make connections between the nodes, ending up in the destination output
sourceLeft.connect(pannerLeft);
sourceRight.connect(pannerRight);
pannerLeft.connect(audioCtx.destination);
pannerRight.connect(audioCtx.destination);

// Start playing the input nodes
sourceLeft.start(0);
sourceRight.start(0);
```

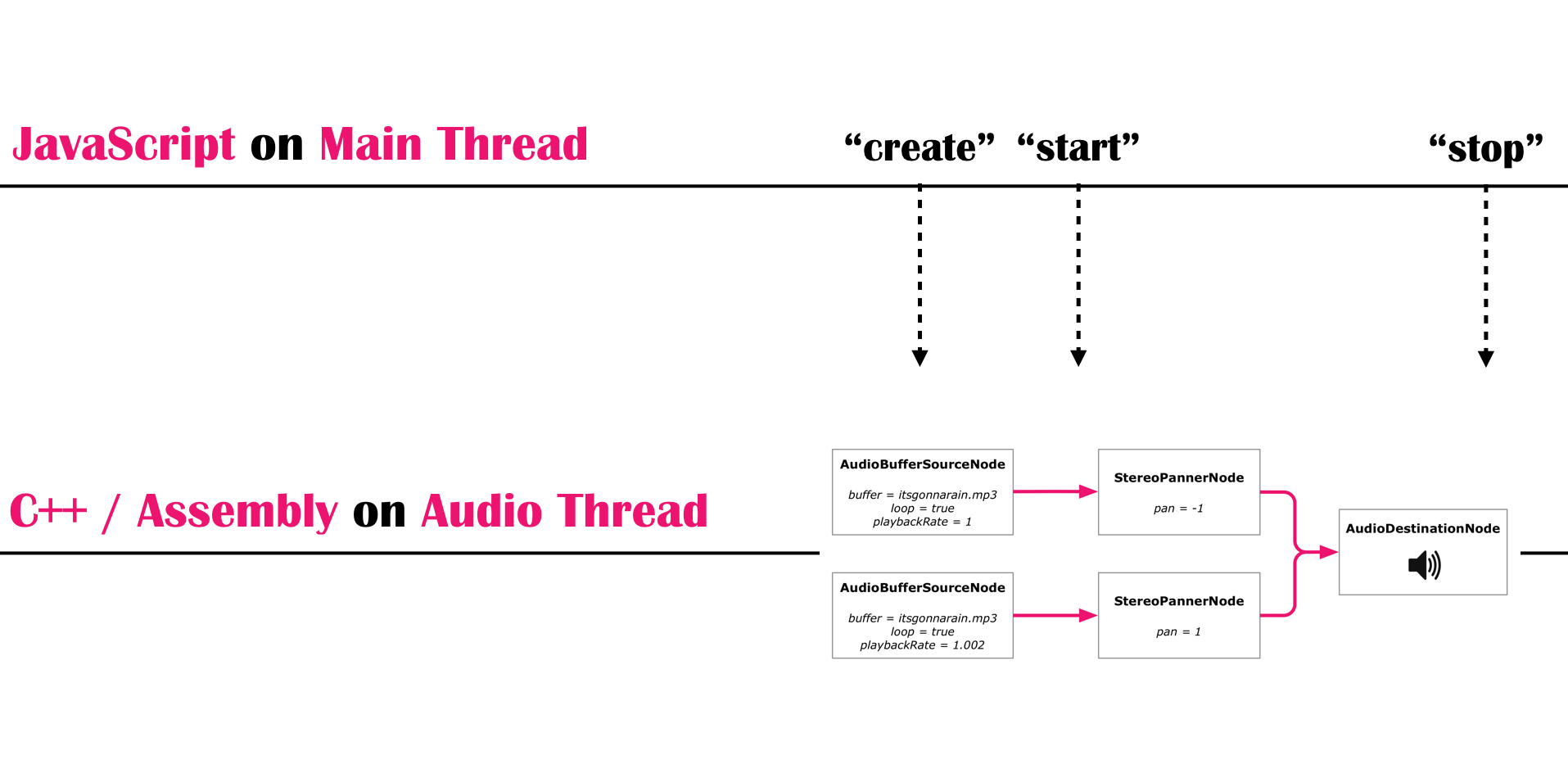

Though you construct the nodes and set their parameters in regular JavaScript, the audio processing itself does not happen in JavaScript. After you've set up your graph, the browser begins processing audio in a separate audio thread, using highly optimized, platform-specific C++ and assembly code to do it.

You can still fully control the graph by adjusting parameters, and creating or removing nodes and connections. But the changes you make are not immediate, and are instead queued up to be applied asynchronously on the audio thread at the end of the event loop.

The heavy lifting is done off your main JavaScript execution context for good reasons: Audio processing is very CPU intensive and you want to do it close to the metal. This also means that on multicore platforms audio processing and UI work can be executed in parallel.

The one exception to this separation is when you want to do arbitrary audio signal processing in JavaScript. With the current but deprecated ScriptProcessorNode it's done in the UI thread, which may easily cause latency issues and also takes away processing time from your UI code even on multiprocessor devices. The upcoming AudioWorklet API will improve on this by executing your JavaScript signal processing code on the audio thread.

How Well Is It Supported?

In general, the Web Audio API is relatively well supported across modern browsers. Apart from Internet Explorer and older Androids, things are looking good.

This does not necessarily mean that all features are equally well supported everywhere though. Here are a few things I've run into:

- Since the spec is still changing, implementations differ because they support different versions of the spec. For example, decoding audio buffers now uses ES2015 Promises, but Safari still implements an older, callback-based version of the same API.

- Some features aren't even in the spec yet and can't be used anywhere. This is the case for AudioWorklets (there's currently an open pull request for them).

- Some of the features mentioned above require other specs to be implemented as well. In particular, stream inputs and outputs are part of the Media Capture and Streams spec, which doesn't look quite as green as the Web Audio API. You cannot access the device microphone on iOS, for example.

- There are different bugs on different browsers. For example, iOS has a sporadic issue with sample rates causing nasty distortion. You can work around it though, and they've actually fixed it now so it should be gone soon. But in the meantime it's the kind of thing you just have to figure out.

- On iOS your sounds need to be triggered by a user action, so you can't just begin playing on page load. There's an active discussion about whether similar restrictions should be added to Chrome on Android.

- As it says in the caniuse.com data, you still need a vendor prefix on Safari.

You can get around many of these issues by using shims that iron out browser differences, or libraries that give you a higher-level audio API that should work the same across browsers (like Tone.js or Howler.js). Some of the restrictions are more severe though, like the lack of microphone access on iOS.
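To give an idea of what such a shim does, here's a tiny hand-rolled sketch covering two of the issues above: the Safari vendor prefix and the callback-based decoding API. Both helper names are hypothetical, and a real shim library handles far more cases than this.

```javascript
// Pick the AudioContext constructor, falling back to the prefixed Safari one.
function getAudioContextClass(globalObj = globalThis) {
  return globalObj.AudioContext || globalObj.webkitAudioContext || null;
}

// Decode audio data with a Promise, whichever form of decodeAudioData exists.
function decodeAudio(audioCtx, arrayBuffer) {
  return new Promise((resolve, reject) => {
    // Passing callbacks works with both the older and the newer API.
    const maybePromise = audioCtx.decodeAudioData(arrayBuffer, resolve, reject);
    // Newer implementations also return a Promise; route its failure to ours.
    if (maybePromise && typeof maybePromise.catch === 'function') {
      maybePromise.catch(reject);
    }
  });
}

// Usage (in a browser):
//   const AudioContextClass = getAudioContextClass(window);
//   const audioCtx = new AudioContextClass();
//   const audioBuffer = await decodeAudio(audioCtx, encodedArrayBuffer);
```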

Links and Resources

If you like experimental music and want to learn Web Audio by hacking on something fun, my JavaScript Systems Music tutorial might be of interest to you.

MDN is my go-to source when I'm trying to figure out a particular feature of the API. It has documentation for all the nodes and parameters as they are currently implemented in browsers. When you're dealing with binary audio data buffers directly, you may also find their documentation of typed arrays very useful.

The relevant specs are a good way to dive into how a specific feature actually works:

- There's the Web Audio spec itself.

- Streams are specified in the Media Capture and Streams spec.

- The Web MIDI spec is useful when you want to support MIDI connected devices in your apps.

The Web Audio Weekly newsletter from Chris Lowis is nice if you want to get a semi-regular email digest of what's going on with Web Audio. (There's also a great talk on YouTube he's given about exploring the history of sound synthesis with Web Audio.)

The Mozilla Hacks blog often has interesting audio related content. For example, Soledad Penadés recently published a very useful rundown of recent changes in the Web Audio API.

If you just want to see some cool stuff, check out Google's Chrome Music Lab and Jake Albaugh's Codepens.

Tero Parviainen is an independent software developer and writer.

Tero is the author of two books: Build Your Own AngularJS and Real Time Web Application development using Vert.x 2.0. He also likes to write in-depth articles on his blog, some examples of this being The Full-Stack Redux Tutorial and JavaScript Systems Music.