This post is part of a series I'm writing about making sounds and music with the Web Audio API. It is written for JavaScript developers who don't necessarily have any background in music or audio engineering.

First Things First: What Is A Digital Audio Signal?

When you represent a sound wave in digital form, what you have is essentially a big array of numbers. Each number in the array represents the position of the sound wave at a particular point in time, or in other words, its amplitude at that point.

When you visualize the numbers from one of these arrays, you get a waveform, such as the ones you see on Soundcloud. By looking at it you get an idea of the sound wave's amplitude over time.

These arrays are usually very large, since you need a fine-grained sampling of a sound wave in order to capture it accurately. A commonly used sampling frequency for digital audio is 44.1 kHz, which means that a sample is taken 44,100 times per second. A minute of sound takes up an array of 2,646,000 numbers!
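The arithmetic is simply duration times sample rate, multiplied again by the number of channels. A small helper makes this explicit. The name sampleCount is our own illustration and not part of any API:

```javascript
// Hypothetical helper: how many numbers it takes to store a clip.
// samples = seconds * sampleRate * channels
function sampleCount(seconds, sampleRate, channels = 1) {
  return Math.round(seconds * sampleRate) * channels;
}

console.log(sampleCount(60, 44100));    // 2646000 (one minute, mono)
console.log(sampleCount(2, 44100));     // 88200 (two seconds, mono)
console.log(sampleCount(60, 44100, 2)); // 5292000 (one minute, stereo)
```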

What this means is that the kinds of waveforms you see on Soundcloud are really very rough approximations of the original sound waves. The 9-minute Death Hawks song above would have about 23 million samples in it. (Or actually 46 million since it's in stereo and you need two signals: left and right.) So what's actually visualized in a waveform like that are averages of the amplitude over time.
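Here is a minimal sketch of how such a summary could be computed. It assumes a simple scheme: split the samples into equal buckets and keep the average *absolute* amplitude of each one. Absolute values matter because a plain average of a wave that swings evenly above and below zero would come out close to zero. We don't know exactly what Soundcloud does; real waveform renderers may use peaks, RMS, or something else entirely. The function name is hypothetical:

```javascript
// Hypothetical sketch: shrink a long signal into `bucketCount` display points
// by averaging the absolute amplitude inside each bucket.
function summarizeWaveform(samples, bucketCount) {
  const bucketSize = Math.ceil(samples.length / bucketCount);
  const summary = new Float32Array(bucketCount);
  for (let bucket = 0; bucket < bucketCount; bucket++) {
    const start = bucket * bucketSize;
    const end = Math.min(start + bucketSize, samples.length);
    let total = 0;
    for (let i = start; i < end; i++) {
      total += Math.abs(samples[i]);
    }
    // Trailing buckets can be empty when the length doesn't divide evenly.
    summary[bucket] = end > start ? total / (end - start) : 0;
  }
  return summary;
}

// Eight samples squeezed into two display points.
const demoSamples = Float32Array.from([0.5, -0.5, 0.5, -0.5, 0.25, -0.25, 0.25, -0.25]);
console.log(Array.from(summarizeWaveform(demoSamples, 2))); // [ 0.5, 0.25 ]
```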

But if you open an audio file in an audio editor like Audacity and zoom all the way in, you can see the individual samples: tens of thousands of them for each second of audio (44,100 per second at 44.1 kHz). These sample numbers make up a digital audio signal.

And How Do I Work With Such Signals In JavaScript?

The Web Audio API, like any system that works with digital audio, basically shuffles around little audio buffers stored in numeric arrays. In many cases we don't directly need to process those arrays in JavaScript, but when we do, we use the most efficient API available for working with raw binary data in JavaScript: Typed Arrays. Let's see how that might happen.

The first thing we always do when we want to do anything audio related in the browser is establish an AudioContext. This is our gateway to all things Web Audio:

```javascript
let audioContext = new AudioContext();
```

Say we wanted to generate a two-second sound clip. To store that clip, the first thing we need to do is create an AudioBuffer:

```javascript
let audioContext = new AudioContext();

let myBuffer = audioContext.createBuffer(1, 88200, 44100);
```

The three numbers used here are:

- 1: the number of audio channels we want. For mono sound, we only need 1. For stereo we'd have 2.
- 88200: the number of samples the buffer should hold. We want two seconds' worth of audio and we have a sample frequency of 44.1 kHz: 44100 * 2 = 88200.
- 44100: the sample rate of the buffer, 44.1 kHz.

When we have an AudioBuffer, we can ask it to give us the raw audio data array for a given channel:

```javascript
let myArray = myBuffer.getChannelData(0);
```

The 0 means we're getting the first channel, which in this case is also the only channel. If we were working with stereo sound, we would have to get a separate array for the second channel.
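Note that the length passed to createBuffer counts samples per channel: a two-second stereo buffer is still created with length 88200, and getChannelData(0) and getChannelData(1) each return their own Float32Array of that length. The sketch below fills every channel with a generator function. The helper name fillChannels is hypothetical, and plain Float32Arrays stand in for the channel arrays so the snippet runs outside a browser:

```javascript
// Hypothetical helper: fill every channel array with samples produced by
// generate(channelIndex, sampleNumber).
function fillChannels(channelArrays, generate) {
  channelArrays.forEach((channel, channelIndex) => {
    for (let sampleNumber = 0; sampleNumber < channel.length; sampleNumber++) {
      channel[sampleNumber] = generate(channelIndex, sampleNumber);
    }
  });
}

// Stand-ins for myBuffer.getChannelData(0) and myBuffer.getChannelData(1)
// of a tiny four-sample stereo buffer.
const left = new Float32Array(4);
const right = new Float32Array(4);

// Left channel gets a constant 0.5, right channel stays silent.
fillChannels([left, right], (channelIndex) => (channelIndex === 0 ? 0.5 : 0));
```

In the browser, the list of arrays could be built from a real buffer with Array.from({ length: myBuffer.numberOfChannels }, (_, i) => myBuffer.getChannelData(i)).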

The myArray variable now holds a typed array, which has been created by the Web Audio API. It's a Float32Array with length 88200, to be exact. We can put our 88,200 numbers in it, and the audio signal formed by them is what will be played when the AudioBuffer is played back. We can, for example, go and generate those samples in a loop:

```javascript
for (let sampleNumber = 0 ; sampleNumber < 88200 ; sampleNumber++) {
  myArray[sampleNumber] = generateSample(sampleNumber);
}
```
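To experiment outside the browser (for example in Node), a plain Float32Array of the same length can stand in for the array returned by getChannelData(0). The generateSample below is a hypothetical placeholder that produces a slow ramp, just so the loop has something to call. The sketch also shows two typed-array properties worth knowing: new arrays start out zero-filled, and stored values are rounded to 32-bit float precision.

```javascript
// Stand-in for myBuffer.getChannelData(0): a fixed-length,
// zero-filled array of 32-bit floats.
const myArray = new Float32Array(88200);

// Hypothetical placeholder generator: a ramp from 0 towards 1.
function generateSample(sampleNumber) {
  return sampleNumber / 88200;
}

for (let sampleNumber = 0; sampleNumber < myArray.length; sampleNumber++) {
  myArray[sampleNumber] = generateSample(sampleNumber);
}

// Values are stored at single precision, so 0.1 comes back slightly off.
const probe = new Float32Array(1);
probe[0] = 0.1;
console.log(probe[0]); // 0.10000000149011612
```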

But how exactly can we generate audio samples that actually produce something audible, let alone recognizable or even musical? Today we'll discuss one basic way to do that, which is by producing a sine wave.

What Is A Sine Wave?

A sine wave (often also called a sinusoid) is one of the most basic building blocks of audio signals. Sine waves are easy to generate, transform, and combine, which makes them a very useful primitive for all kinds of purposes.

To make a sine wave, we first need to talk about angles and the sine function. And to approach that, we need circles. That's because the sine function has an intimate relationship with the so-called unit circle. Here's how the two are connected:

- Draw a circle with a radius of 1, whose center is at the origin (0,0).
- Draw a line from the circle's center point to anywhere on the circle's circumference.
- An angle forms between that line and the positive x-axis. Depending on where you drew the line, the angle could be anything between 0° and 360°, or 0 and 2π radians. (Radians will be the unit of measure more useful to us.)
- To find the sine of that angle, find the y coordinate of the point where the line meets the circle's circumference. The sine's value could thus be anything between -1 and 1.
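In code, degrees convert to radians by multiplying by π/180, and the point where a line at angle θ meets the unit circle is (cos θ, sin θ), so its y coordinate is the sine. A small sketch; both helper names are our own:

```javascript
// Convert an angle in degrees to radians: 360° corresponds to 2π radians.
function degreesToRadians(degrees) {
  return degrees * (Math.PI / 180);
}

// The point where a line at the given angle meets the unit circle.
// Its y coordinate is the sine of the angle.
function pointOnUnitCircle(radians) {
  return { x: Math.cos(radians), y: Math.sin(radians) };
}

// Roughly 0, 1, 0 and -1 (tiny floating-point leftovers aside).
for (const degrees of [0, 90, 180, 270]) {
  const { y } = pointOnUnitCircle(degreesToRadians(degrees));
  console.log(`${degrees}° -> sine ${y.toFixed(3)}`);
}
```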

See how the sine forms from different angles:

So, the way sine works is that as the angle changes, it oscillates between -1 and 1. It's positive half of the time and negative the other half, depending on whether the endpoint of our line is currently above or below the x axis.

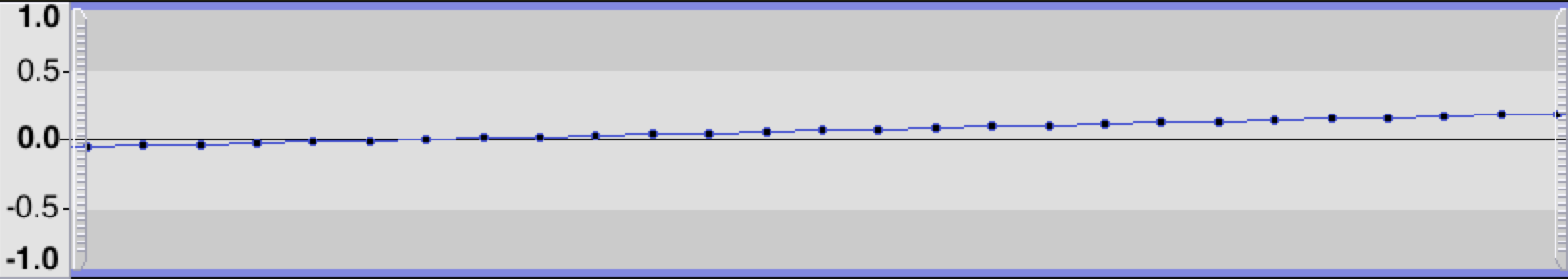

Now, imagine a process that grows the angle constantly over time, gradually revolving it round and round the circle. If we follow that process and record all the sines that we get along the way, we get a sine wave:

What we have here is an oscillator: A process that produces a repeating signal. In this case the signal is a sinusoid.
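That process can be sketched as a small JavaScript generator: keep an angle, advance it by a fixed step, and yield its sine each time. This is a hypothetical illustration of the idea, stepping one eighth of a circle at a time:

```javascript
// A minimal oscillator sketch: the angle grows by a fixed step on every
// call, and each yielded value is the sine of the current angle.
function* sineOscillator(stepRadians) {
  let angle = 0;
  while (true) {
    yield Math.sin(angle);
    angle += stepRadians;
  }
}

// Eight steps per revolution, so the values repeat every 8 samples.
const osc = sineOscillator((2 * Math.PI) / 8);
const values = [];
for (let i = 0; i < 16; i++) {
  values.push(osc.next().value);
}

// Roughly 0, 0.707, 1, 0.707, 0, -0.707, -1, -0.707, then repeating.
console.log(values.map((v) => v.toFixed(3)).join(", "));
```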

How Can I Make A Sine Wave Signal with Web Audio?

In order to represent and play a sine wave in Web Audio, we need to turn it into one of those numeric arrays we talked about, essentially capturing samples of the continuous wave over time.

There are basically two ways we could do this: there's a hard way, which is to calculate the values manually, and there's an easy way, which is to let the Web Audio API do it for us. The benefit of doing it the hard way is that we learn much more about how this "sampling" actually works.

OK, so How Do I Make One the Hard Way?

Let's talk about the frequency of the sine wave. What we mean by that is: "How many times per second does the line go all the way around the circle?" Or alternatively: "How many times per second does the resulting wave go all the way up, then all the way down, and back again?"

In the animation above this happens once per second, so the frequency of that wave is 1 Hz. For an actual sound wave, this is way, way too slow. For audible signals we need to start talking about waves that are much faster than that: 10s, 100s, or 1000s of Hz.

A good frequency for us to use today is 440Hz. This is the so-called A4 note, commonly used as a standard note for tuning musical instruments. We can produce this note with a sine oscillator of 440Hz, meaning one that goes around full circle 440 times per second. 440Hz is its so-called real-time frequency.

Another useful way to think about how fast this thing oscillates is its angular frequency, meaning how many radians per second it goes through. Since we're doing 440 circles per second and there are 2π radians in a circle, the angular frequency is 440 * 2 * π ≈ 2764.6 rad/s. Here's how we can express these frequencies in code:

```javascript
const REAL_TIME_FREQUENCY = 440;
const ANGULAR_FREQUENCY = REAL_TIME_FREQUENCY * 2 * Math.PI;
```
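A few more quantities follow from the same numbers and can help build intuition: the period (how long one full cycle takes, 1 / frequency), how many samples one cycle spans at 44.1 kHz, and how far the angle advances from one sample to the next. At 440 Hz a cycle lasts about 2.27 ms and spans only about 100 samples. The two constants above are repeated so the snippet runs on its own; the other constant names are ours:

```javascript
const REAL_TIME_FREQUENCY = 440;                             // cycles per second (Hz)
const ANGULAR_FREQUENCY = REAL_TIME_FREQUENCY * 2 * Math.PI; // radians per second
const SAMPLE_RATE = 44100;                                   // samples per second

// Time taken by one full trip around the circle.
const PERIOD_SECONDS = 1 / REAL_TIME_FREQUENCY;
// How many samples capture one cycle of the wave.
const SAMPLES_PER_CYCLE = SAMPLE_RATE / REAL_TIME_FREQUENCY;
// How far the angle advances between two consecutive samples.
const RADIANS_PER_SAMPLE = ANGULAR_FREQUENCY / SAMPLE_RATE;

console.log(ANGULAR_FREQUENCY.toFixed(1));       // 2764.6
console.log((PERIOD_SECONDS * 1000).toFixed(2)); // 2.27
console.log(SAMPLES_PER_CYCLE.toFixed(1));       // 100.2
```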

We want to use this oscillator to fill up the array we initialized earlier - the one for the two-second audio buffer of 2 * 44100 = 88200 samples. That is, we need to write the generateSample function that gets called from this loop:

```javascript
for (let sampleNumber = 0 ; sampleNumber < 88200 ; sampleNumber++) {
  myArray[sampleNumber] = generateSample(sampleNumber);
}
```

That function is going to be called 88,200 times. The first thing we need to do there is convert the sample number into the sample time in seconds, which measures the time between the beginning of the buffer and this sample. In this case it will be a number between 0 and 2, since our buffer is 2 seconds long. We can get the sample time by dividing the sample number by our sample frequency, 44,100:

```javascript
function generateSample(sampleNumber) {
  let sampleTime = sampleNumber / 44100;
}
```

Then we need to figure out what the angle of our oscillator is at this point in time. Here we can plug in the angular frequency that we calculated earlier. The sample angle will be the angular frequency multiplied by the sample time. (Just like the distance travelled by a moving car is the car's velocity multiplied by elapsed time.)

```javascript
function generateSample(sampleNumber) {
  let sampleTime = sampleNumber / 44100;
  let sampleAngle = sampleTime * ANGULAR_FREQUENCY;
}
```

The value of the sine oscillator is then simply the sine of this angle. We can just return it, which means it'll end up in the audio buffer:

```javascript
function generateSample(sampleNumber) {
  let sampleTime = sampleNumber / 44100;
  let sampleAngle = sampleTime * ANGULAR_FREQUENCY;
  return Math.sin(sampleAngle);
}
```
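The function above hard-codes the 44,100 Hz sample rate and, through ANGULAR_FREQUENCY, the 440 Hz frequency. A hypothetical variant (makeSineSampleGenerator is our name) takes both as parameters, which makes it easy to try other notes or sample rates while computing the same formula, sin(2π · frequency · sampleNumber / sampleRate). Choosing a sample rate of exactly four times the frequency gives a handy sanity check: consecutive samples land a quarter turn apart.

```javascript
// Hypothetical generalization of generateSample: returns a function that
// maps a sample number to sin(2π · frequency · sampleNumber / sampleRate).
function makeSineSampleGenerator(frequency, sampleRate) {
  const angularFrequency = frequency * 2 * Math.PI;
  return function generateSample(sampleNumber) {
    const sampleTime = sampleNumber / sampleRate;
    const sampleAngle = sampleTime * angularFrequency;
    return Math.sin(sampleAngle);
  };
}

// 11025 Hz sampled at 44100 Hz: four samples per cycle, a quarter turn apart.
const quarterTurns = makeSineSampleGenerator(11025, 44100);
// Roughly 0, 1, 0, -1.
console.log([0, 1, 2, 3].map((n) => quarterTurns(n).toFixed(3)).join(", "));

// The 440 Hz wave from this article.
const a4 = makeSineSampleGenerator(440, 44100);
```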

With this we've calculated two seconds worth of samples from a sine wave that oscillates at 440Hz. And now that we've got those samples in a buffer, we can play it back. We can do so using a Web Audio AudioBufferSourceNode, which is an audio node that knows how to play back an AudioBuffer object.

Here's the complete source code that plays back two seconds worth of a sine wave.

```javascript
const REAL_TIME_FREQUENCY = 440;
const ANGULAR_FREQUENCY = REAL_TIME_FREQUENCY * 2 * Math.PI;

let audioContext = new AudioContext();

// A mono buffer holding two seconds of audio at 44.1 kHz.
let myBuffer = audioContext.createBuffer(1, 88200, 44100);
let myArray = myBuffer.getChannelData(0);

// Fill the buffer with samples of a 440 Hz sine wave.
for (let sampleNumber = 0 ; sampleNumber < 88200 ; sampleNumber++) {
  myArray[sampleNumber] = generateSample(sampleNumber);
}

function generateSample(sampleNumber) {
  let sampleTime = sampleNumber / 44100;
  let sampleAngle = sampleTime * ANGULAR_FREQUENCY;
  return Math.sin(sampleAngle);
}

// Play the buffer through the audio context's output.
let src = audioContext.createBufferSource();
src.buffer = myBuffer;
src.connect(audioContext.destination);
src.start();
```

The four steps taken there to play the sound are:

- Ask the AudioContext to create a new AudioBufferSourceNode.
- Assign our AudioBuffer, which now contains the sine wave data, as the buffer the source node should play.
- Route the audio signal from the source to the built-in AudioDestinationNode of the audio context. That's going to send it to the sound card and make it audible.
- Start playing the source node.

The result of this is that the 88,200 numbers in the Float32Array are streamed into the AudioDestinationNode of the AudioContext, 44,100 samples per second, over two seconds. This sends the signal over to your sound card's digital-to-analog converter, and from there as an analog audio signal to your speakers, and then as air vibrations to your eardrums. We've essentially written a script that transfers a sine wave from your web browser to your brain. Not too shabby!

And How About The Easy Way?

Now we know how to generate a two-second clip of a sine wave using JavaScript. But that's a very cumbersome and resource-intensive way to do it, for at least a couple of reasons:

- We're doing all that calculation in JavaScript code. No matter how fast the JS engine might be, it's easy to see why this can become a performance problem.

- We're very constrained in the kinds of things we can do. What if we need a continuous sine wave that could run for hours on end? Or what if we need hundreds of sine waves of different frequencies? Our current code is not going to cut it.

Luckily the Web Audio API has an abstraction that can do this for us: The OscillatorNode can emit a continuous audio signal that contains a sine wave. This is pretty much always preferable to the manual approach, for the following reasons:

- OscillatorNode is much, much more efficient. It does its work in native browser code (C++ or Assembly) rather than in JavaScript.
- OscillatorNode works in the background, in the browser's audio thread. It doesn't take up time from your JavaScript thread.
- You can easily make a continuous wave that can run forever, instead of just filling up a buffer of a predefined duration.
- You don't have to do the math yourself. You just give OscillatorNode a real-time frequency in Hz and it will oscillate at that frequency.

Here's how we can use an OscillatorNode to make the same sine wave as before:

```javascript
const REAL_TIME_FREQUENCY = 440;

let audioContext = new AudioContext();

// An OscillatorNode produces a sine wave unless its type is changed.
let myOscillator = audioContext.createOscillator();
myOscillator.frequency.value = REAL_TIME_FREQUENCY;
myOscillator.connect(audioContext.destination);

myOscillator.start();
myOscillator.stop(audioContext.currentTime + 2); // Stop after two seconds
```

Now we don't have all that code for generating individual samples. But it's good to understand that it is still happening behind the scenes. There's some native browser code running in the audio thread, which is generating sine wave samples and putting them into tiny buffers of 128 samples each. Those buffers are then being streamed to the sound card. And we never need to see any of that in our JavaScript code.
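As a rough illustration only (browsers implement OscillatorNode natively, and this is not a claim about how any of them actually do it), here's how a continuous sine could be produced in 128-sample blocks. The key point is to carry the oscillator's phase from one block to the next, so the wave doesn't jump at block boundaries and can keep running indefinitely. The class and method names are hypothetical:

```javascript
// Illustrative block-based sine source. It keeps its phase (in radians)
// between calls, so consecutive blocks join into one seamless wave.
class BlockSineSource {
  constructor(frequency, sampleRate) {
    this.phase = 0;
    this.phaseStep = (2 * Math.PI * frequency) / sampleRate; // radians per sample
  }

  // Fill one block of samples and advance the phase.
  renderBlock(blockSize = 128) {
    const block = new Float32Array(blockSize);
    for (let i = 0; i < blockSize; i++) {
      block[i] = Math.sin(this.phase);
      this.phase += this.phaseStep;
      // Wrap the phase into [0, 2π) so it never grows without bound.
      if (this.phase >= 2 * Math.PI) this.phase -= 2 * Math.PI;
    }
    return block;
  }
}

const source = new BlockSineSource(440, 44100);
const firstBlock = source.renderBlock();
const secondBlock = source.renderBlock();
console.log(firstBlock.length, secondBlock.length); // 128 128
```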

So that's how you make a sine wave. In the next article we'll talk about dynamically changing the wave frequency and how that affects the pitch of the sound.

Tero Parviainen is an independent software developer and writer.

Tero is the author of two books: Build Your Own AngularJS and Real Time Web Application development using Vert.x 2.0. He also likes to write in-depth articles on his blog, some examples of this being The Full-Stack Redux Tutorial and JavaScript Systems Music.